As AI enters Pennsylvania classrooms, teachers and students face a learning curve

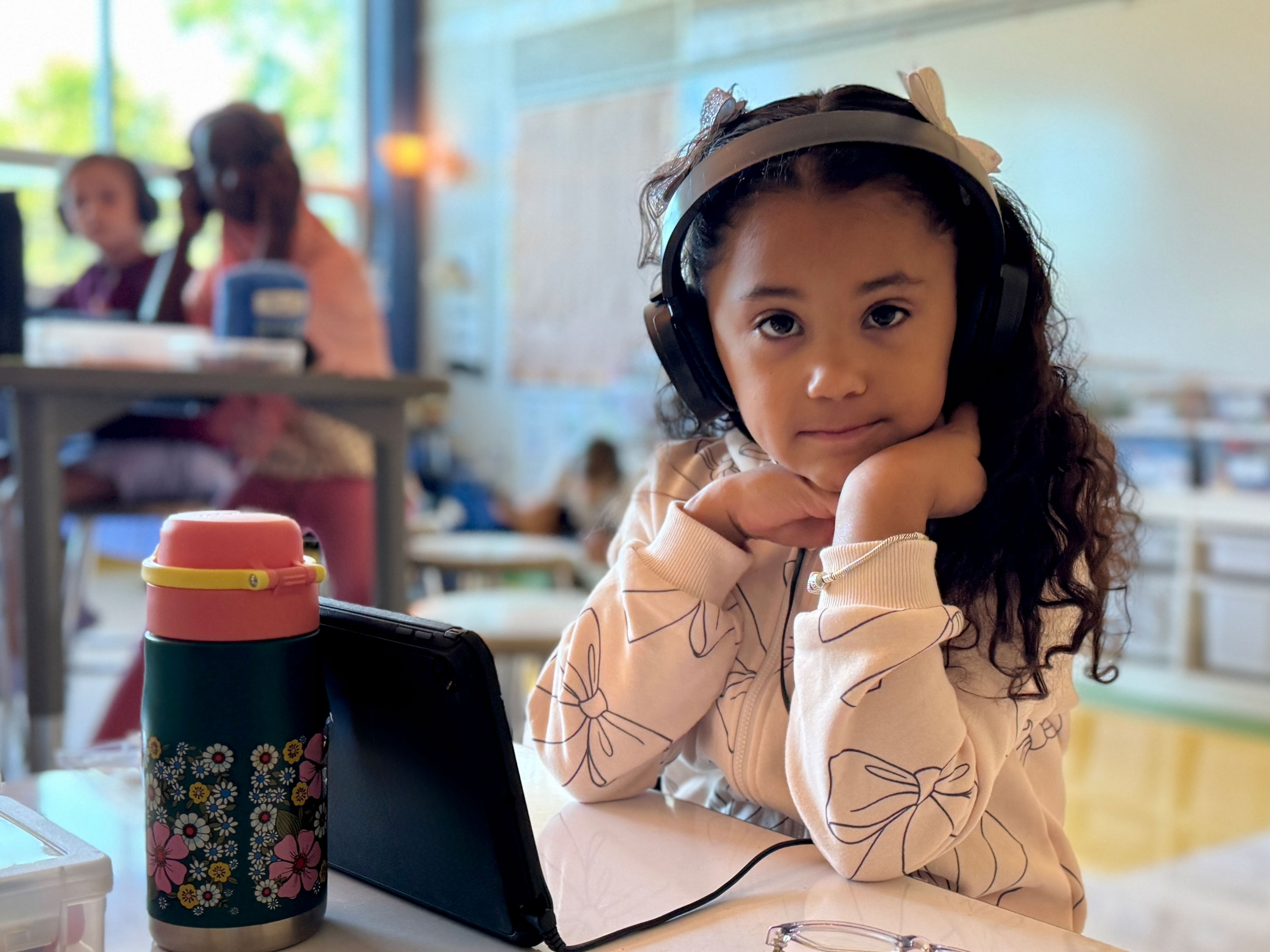

Pennsylvania schools are racing to determine the role artificial intelligence should play in education – and when.

peshkov/Getty Images

In nearly a decade of teaching artificial intelligence to schoolchildren, David Touretzky has encountered his fair share of skeptics asking him why kindergartners need to be taught about artificial intelligence. “And my answer is: We’re not introducing AI to kindergartners,” the Carnegie Mellon University computer science professor explained. “By the time these kids get to kindergarten, they’ve already spent two years talking to Alexa.”

Touretzky is the founder of AI4K12, an organization that, since 2017, has led a movement to teach AI to children in kindergarten through high school. Relatively new and poorly understood by the general public, artificial intelligence is a vast and rapidly expanding digital subtext that increasingly powers many of our routine interactions – from the GPS in our cars to the algorithms behind YouTube and Netflix to the Grammarly app that polishes many an email.

It is also a burgeoning industry locally: Pennsylvania has positioned itself as a hub for AI-powering data centers, and Gov. Josh Shapiro has piloted cutting-edge AI tools in state government, touting partnerships with OpenAI and with the state’s leading research institutions like Carnegie Mellon University and the University of Pennsylvania.

Against this backdrop, more and more educators across the commonwealth are coming around to Touretzky’s way of thinking: Since AI is already part of everyday lives, it will – and should – also be a part of public education. Accordingly, a growing number of Pennsylvania districts are taking a proactive approach to teaching both students and educators what AI is and how to use it.

Among other things, AI tools can help students generate ideas for assignments; draft exams and suggest grades for busy teachers; tailor practice exercises to students’ particular needs; provide feedback on ideas and projects; and illuminate topics, from marine exploration to the interior of human cells, through virtual reality experiences that far surpass the capacities of a textbook or even a webpage.

“Every day, it evolves,” noted Luke Orlando, a self-described early adopter of technology who incorporates AI into his middle-school English classes in East Stroudsburg. “Last year, if you were teaching about AI in your classroom, you were proactive. This year, if you’re using AI in your classroom, you’re reactive. That’s how fast the paradigm is shifting.”

Much of the public discourse regarding AI in education has centered on an obvious negative consequence: student cheating enabled by ChatGPT and other generative AI chatbots. The bots are built on what are known as large language models, which are trained on vast amounts of text, much of it drawn from the internet, and use the patterns they have learned to produce the desired content.

Free and almost absurdly simple to use, the bots spit out convincing term papers, answer questions on just about any topic and summarize books in little more than the time it takes students to type “Tell me the plot of Homer’s ‘Iliad.’”

All of this has prompted considerable handwringing over a generation that increasingly outsources thinking and writing to machines – not to mention the implications for social development, human interaction and the teaching profession. Still, while many in the education community are wary of AI, consensus is growing around the need to address a technology that feels inevitable – and, ideally, to harness its potential for good.

“With social media, we ignored it,” recalled Christopher Clayton, who, since 2012, has directed education services for the Pennsylvania State Education Association. “We by and large did not bring that into school … When I talk with our members, I say, ‘Are we going to have this happen again with AI? Or are we going to be intentional this time?’”

Clayton, who holds a doctorate in language and literacy education, is a former high school and college English teacher, which has afforded him an ideal vantage point to see what he calls the “massive impact” of technological innovation on both teaching and writing.

Five years ago, he shepherded the association’s 177,000 members through the online transition prompted by the COVID-19 pandemic. That was the first major digital upheaval for a profession that had barely changed its essential practices in decades, if not centuries. The sector had barely recovered from that seismic shock when ChatGPT was launched in late 2022, unleashing a whole new set of challenges.

Clayton had been hosting workshops on emerging technologies for several years when, in May 2024, the PSEA’s annual House of Delegates meeting organized a 20-strong task force to study AI. The result, published a year later, was the Artificial Intelligence Task Force Impact Report, which outlined recommendations and resources around issues from legal concerns and teacher training to security and academic integrity.

Those guidelines were sorely needed in a commonwealth that, like 21 other states, lacks a formal statewide framework around educational AI. “In Pennsylvania, local control is a part of that,” Clayton noted, referring to a decentralized public school network. “That makes it incumbent upon 500 school districts to each lay the groundwork for clear policies for staff and for students. And that means we’re going to have 500 different places that we’re at across the state.”

The early adopters

One of those places is the Mount Pleasant Area School District, southeast of Pittsburgh, where the administration has embraced AI’s challenge. Kevin Svidron, a 36-year veteran who chairs the Mount Pleasant Area Senior High School social studies department, and his protégé, second-year teacher David Greene, encourage their students to “use AI to structure your learning – diving in for deeper analysis and understanding,” Greene said.

Svidron provides his students with detailed instructions on utilizing AI feedback to develop critical thinking. “That’s really important – that the educator instructs on how to properly use it,” he said, rather than simply “expect them to use or not use it.”

Recently, one of his AP macroeconomics students – senior Susan Babick – was impressed by Svidron’s demonstration of how AI could use her vague descriptions to generate useful images for a slideshow. But last year, she saw the technology’s downside when another teacher thought an essay she submitted had been machine-generated – and the teacher’s AI-checker programs offered conflicting analyses that failed to exonerate her. “That was really scary,” Babick recalled.

Another Mount Pleasant student, junior Lucas Poole, said he uses AI to generate practice exercises for chemistry, helping him to “fill in the gaps” outside of class. He also finds AI helpful in generating ideas or frameworks at the beginning of an assignment, since, as he observed, “most people struggle with finding a place to start.”

Still, Svidron is frustrated by what he views as a lagging embrace of technology by teachers. That dynamic can be especially pronounced in rural districts, he said, where mundane concerns often trump the allure of cutting-edge technologies – and where, as a consequence, people may fail to understand AI’s import.

Touretzky has run into similar resistance from Pittsburgh-area educators, who, he said, have shown little interest in his organization’s education about a technology innovated largely in its backyard (Carnegie Mellon is a longtime leader in robotics research, and Pittsburgh’s startup scene has nurtured AI-powered apps like Uber and Duolingo).

Instead, AI4K12 has found a receptive audience in the Sun Belt. Districts in Georgia, Texas and Florida have signed on to Touretzky’s middle school curriculum, with other states lining up for its weeklong teacher training workshops.

AI knowledge “is now part of being an educated adult,” opined Touretzky. “Some people call AI the new electricity. And just as adults should know what atoms and molecules are, where electricity comes from, they now also need to know how AI works.

“We know it’s going to have this tremendously disruptive effect on society,” he added. “It’s already changing our ideas about what kind of jobs will exist. It’s wreaking all kinds of social changes – deepfakes, for instance, mean you can no longer trust the videos that you see.”

In the Capital Region, the Mechanicsburg Area School District has taken that message to heart. Shortly after arriving in 2024, Assistant Superintendent Chris Bowman convened a steering committee to consider its approach to AI – and scrutinize the potential impact on educational practices, data privacy and equity.

At first, the committee aspired “to assess every single tool,” Bowman recalled. “And then we were like, ‘Time out. There’s no way that we’re going to be able to keep up with the evolution of AI.’”

So the group instead created guidelines around what they hoped AI would do – “enrich teaching, learning and communication, without compromising the social interactions and human decision-making that foster … healthy learning communities,” as Bowman summarized. The Mechanicsburg team also created a rubric for evaluating a given practice’s AI impact based on a scale of 0 to 3, “with 0 being no AI use at all … and 3 being full use of AI with human oversight,” Bowman explained.

He recently put those principles into practice in his own home, helping his son, a high school senior, use AI to generate feedback on college application essays. “I showed him, if we put this into AI, here’s the prompts that we would use,” Bowman recalled of how they instructed the chatbot to assess the essays’ strengths and weaknesses from the perspective of a college admissions counselor. His son “was pretty blown away,” he said.

An app for everything

ChatGPT may be the best-known AI chatbot, but across Pennsylvania, districts are subscribing to a variety of apps that utilize machine learning to enhance education, both in the classroom and beyond.

For students, several popular tools use AI to individualize tutoring and academic support. They include School AI and Khanmigo, the offering from the well-established educational software outfit Khan Academy.

Many districts now provide their teachers with MagicSchool, a suite of 80-plus tools that assist with everything from lesson plans to assessments – even a joke generator, “if you just want to start class with a corny teacher joke every day,” said Clayton, the PSEA education director.

Teachers also get AI help with quizzes, practice tests and curriculum planning with Brisk, a Google Chrome extension that seamlessly coordinates with the other Google apps many public schools already rely on to track assignments and grades. To speed up grading, many educators use Class Companion, another AI tool.

And then there’s Skipper. She’s the MagicSchool-powered chatbot that Luke Orlando created over the summer to coach his middle-school English students through their writing projects at East Stroudsburg’s J.T. Lambert Intermediate School.

Skipper, a “female avatar,” likes skateboarding and music and wears a backwards baseball cap. The relatable persona makes some students more comfortable going to a chatbot for help with their essays – including, as Orlando recalled, one shy middle-schooler whose back-and-forth with Skipper clarified spelling, grammar and organizational issues to help craft an A-graded paper. “I literally did not talk to this student once,” marveled the teacher. “I have 20- or 30-something students in a classroom, all of whom need individual help, some more than others. What this program allows me to do is differentiate for the students who need more personalized assistance from a human, whereas this other student was entirely capable of learning on her own.”

Orlando shuts Skipper off when he wants students to rely on “good old-fashioned human research.” Afterwards, he turns her back on to evaluate the students’ progress: Are the results on topic? Are the sources reliable? “In the end, (students) still have to do the analysis,” he said.

The shadow of Big Tech

The information generated by AI tools isn’t entirely neutral; in many cases, it’s filtered through algorithms designed by companies such as Google, whose dominance in many classrooms raises questions about privacy – and the impact on children who grow up immersed in a corporate interface. In school districts like Philadelphia, students do their work on district-issued Google Chromebooks, while assignments are posted and submitted through the Google Classroom app – meaning the company runs the classroom, both literally and figuratively.

“Big Tech is struggling for who’s going to become a dominant force in the market,” observed Clayton. “I have big worries around corporate indoctrination … If we’re not careful, Big Tech will make sure this happens to us, not with us.”

That sentiment was echoed by Mike Soskil, a Wayne County elementary STEM teacher and Pennsylvania’s 2017 Teacher of the Year. People “think AI literacy is teaching kids how to use AI – but I think it’s more important for kids to understand what their role is when they interact with it, what data is being collected and how it’s being used,” said Soskil, who serves on the PSEA’s AI task force. “What are the implicit biases that are built into AI models … and what are the profit motives of companies that are putting AI in front of you?”

Those questions become more urgent in places where a single company can dominate local education. Google’s footprint is particularly heavy in the Delaware Valley: Its products are used throughout the School District of Philadelphia, Pennsylvania’s largest district, and it recently announced a $1 million grant through its philanthropic arm, Google.org, to expand the University of Pennsylvania Graduate School of Education’s “Pioneering AI in School Systems” program. The grant will extend that partnership, currently with the School District of Philadelphia, to five additional districts in the region.

L. Michael Golden, the education school’s vice dean for innovative programs and partnerships, said Penn scholars had been working closely with the School District of Philadelphia for the past year to “demystify some of the misconceptions around AI, understand some of the risks inherent, but also some of the promise.” His team advises district educators on how to make informed choices as they establish guidelines and policies around machine learning tools.

For Philadelphia, the biggest priority has been cybersecurity, Golden said. The district selected Google’s AI tool, Gemini, as its platform, but opted for a “walled garden” approach, meaning that its user data stays within a closed circuit and does not feed back into the large language model. “They’re securing the safety and privacy of the data that goes into it,” Golden said. “That’s a choice they’ve made. Other districts are making different choices and using different platforms.”

Those choices can be especially tricky in cash-strapped public school districts, which have myriad competing priorities – from the cash crunch stemming from Harrisburg’s four-month-long budget impasse to workforce shortages and aging facilities. AI also presents ethical challenges related to issues that schools are already grappling with, including access and infrastructure, as well as the rural-urban digital divide.

Technological innovation “has to fit into all the other things that are happening,” Golden observed. “But it’s incumbent upon all of us to take a proactive stance and not be reactive. Tech companies shouldn’t decide what policy should be employed. Many people should be in that conversation.”

Perils along with promise

Data privacy is a major technical concern – but for most laypeople, from educators to parents and the students themselves, the main anxiety around AI is “a real erosion of cognitive ability if kids start to overly rely on it,” as Clayton put it, citing risks identified in academic studies.

Marilyn Pryle, who holds an education doctorate and directs professional learning for the East Stroudsburg Area School District, is one of the professionals concerned about the core values of education in the age of machine learning. “As teachers, we’re fighting to preserve that human connection … and it’s just getting so hard,” lamented the 2019 Pennsylvania Teacher of the Year.

A major culprit, she noted, is the ever-escalating and emotionally stressful battle between students’ AI cheating and teachers’ AI-checker tools, which purport to identify the percentage of a submitted assignment that can be traced to other online materials – suggesting that a chatbot like ChatGPT plays a disproportionately large role in the struggle.

“It’s this cat-and-mouse thing that we will never, ever win,” said Pryle, who until last June was a 10th-grade English teacher. “The nature of education is so transactional – the kids perceive it as, ‘I turn in this piece of paper, you give me this grade.’

“The kids don’t perceive the meaningfulness in education,” she added. “And so our discussion should not be, ‘How can we catch them and force them to do the thinking?’ but instead, ‘How can we get them to be more intrinsically connected to their own learning?’”

From his classroom in the Wallenpaupack Area School District, Soskil shares those concerns. “What I’ve found is that AI, especially generative AI, can be a great tool for learning – but it doesn’t necessarily mean that it’s a great tool for education,” he said. “Learning and education aren’t the same thing. We can learn our multiplication tables, how to make a law, how to pull the thermonuclear bomb – but knowing any one of them doesn’t necessarily make us educated, right? Education involves critical thinking, being able to make connections across different aspects of the curriculum … and to solve problems.”

Pryle, who also serves on the PSEA’s AI task force, believes tools like MagicSchool can play a role in that quest – by, for instance, helping students to edit their essays or generate exercises to improve their grammar skills. But she cautioned that AI grading can be frustratingly inconsistent with human expectations, and that students “freak out” at the idea that real teachers aren’t assessing their work.

For their part, teachers, who often face a steep learning curve using novel technologies, worry that AI will replace them in the classroom altogether. While other white-collar workers have spent decades in front of computers, classroom teaching was a relatively tech-free zone until the pandemic. Before they can deploy student-facing technology, teachers must first become proficient in it.

Then there are the concerns around AI accuracy. In interviews, numerous educators recounted experiments that revealed a significant error rate in AI-generated results – a finding unlikely to surprise anyone who has used AI searches.

In East Stroudsburg, Orlando worries more about those inaccurate or incomplete AI results than about “replacing certain aspects of how students think.”

“Ever since Spellcheck came into the world, everybody has been worse at spelling,” Orlando said. “The same with calculators – every new technology, right? Even something as simple as fire: We outsource part of our digestion to fire. We cook meat beforehand, break down those proteins so that we don’t have to, and sterilize it in the process, so our immune system doesn’t have to work as hard.

“The question that a lot of people have is, ‘Is this the final straw? Are we finally going to be delegating pretty much everything to AI?’” he asked. “I think being proactive about it is one way to reduce those concerns – (learning) upfront what AI can do, what it is, how to be utilizing it ethically and safely.”

Soskil shares that hope, but as the AI landscape evolves, he is feeling less and less sanguine. A technology enthusiast since his early teaching days, he used videoconferencing decades ago to connect Pennsylvania classrooms with cultures around the world. More recently, Soskil analyzed machine learning’s educational potential in the 2017 book he co-authored, “Teaching in the Fourth Industrial Revolution.”

“Our premise at the time was that it was going to be really important to keep humanity centered in education,” he said. “My fear with where we’re headed right now is that AI is really being pushed into classrooms, not for that purpose, but rather for efficiency. If I’m outsourcing (individualized education) to a machine or to an algorithm … I may be able to do it quicker, but I’m not doing it better.

“The way that I see AI being used in society, and specifically in education,” he added, “leads me to fear that we’re losing some of the really important human aspects of what it means to be educated.”
